Models are Worse at Persona-Following than You Think

As AI systems have been increasingly deployed over the past few years, challenges have emerged with respect to their behavior in interaction (or lack thereof) with human users. Examples include models causing AI psychosis, and models ignoring instructions to ask for permission and deleting production databases. The ML research community, acknowledging the importance of interactivity, has incorporated interactivity factors into the evaluation of agents in environments such as tau2-bench (customer service), CRMArena-Pro (enterprise), and Salesbot (sales). These environments incorporate user simulators, models tasked with acting as a human would in a given scenario, to test the ability of models to complete tasks given human ambiguity and behavior.

However, a natural question arises: how can we evaluate and improve the realism of these user simulators themselves? Existing environments assess simulators with coarse metrics such as naturalness, high-level persona compliance, and high-level erroneous behavior. On closer inspection, we find that simulators behave in a non-human-like manner and show low behavioral variation.

We propose persona following as a new goal for LLM training, and ground it in a cognitive-science-based framework for defining and evaluating personas for simulators. As with existing efforts in coding, academic math, and agentic capabilities, focusing on persona following is a valuable and technically compelling way to ensure interactivity in models.

We select one important enterprise use case of persona following: embodying customers in sales. We develop and release SalesSim, an e-commerce sales simulation environment for human agents and sales agents, featuring an automated human-validated evaluation methodology, and

A Call for Research in User Simulators

We highlight the following directions for research on user simulators:

- Direction 1: Research in incorporating user simulators as part of training and evaluation regimes across domains such as multi-turn coding, UX design, recruiting, and sales.

- Direction 2: Training methods for persona-following, which we define as the capability of models to embody arbitrary personas in their responses. We propose a framework for defining personas in Diagram 1.

- Direction 3: Research on defining and training user simulators to embody culturally diverse and non-English-speaking personas.

SalesSim: A Testbed for Sales Interactions in E-Commerce

We introduce a testbed for persona following in sales, where customers must communicate in ways shaped by latent preferences, and sales agents must adapt their approach based on the customer's personality, background, and level of product knowledge. We extend the laptop split of **Salesbot** (Murakhovs'ka et al.), a testbed for catalog-grounded sales inspired by real product-support agents in e-commerce stores and aggregators such as Best Buy. Laptops provide an ideal domain for interactive evaluation, given the many factors that contribute to consumer needs and the multiple axes for personalized sales communication (e.g., varying knowledge levels and the relative importance of different specs).

A Framework for Defining Personas in User Simulators

Inspired by the Cognitive-Affective Personality System (CAPS) framework, we define the following framework for persona definition.

- Character Traits: Character traits are constructs that are relatively stable throughout the life of a person, including personality traits, values, and beliefs.

- Explicit Behavior Patterns: These are explicit behaviors that the person exhibits, which fall under the umbrella of behavior scripts in CAPS. They include habits, speaking patterns, actions, and the types of verbiage used when triggered by a certain situation.

- Past Experiences and Knowledge: These are categories or constructs an individual uses for the self, other people, events, and situations (both external and internal), and the experiences that shape these constructs. These include:

  - Identity-related constructs: name, age, and groups that the persona self-identifies with.

  - Relational graphs.

  - Knowledge of various topics.

  - Experiences and memories.

- Task-specific Scenario Information: This is the information that informs behavior for the specific task that the simulation will be used in, which often involves goals, preferences, and success criteria.

Diagram 1: Framework for Persona Definition in User Simulators

Applying this framework to human agents, we use the Big Five personality framework for character traits, which consists of Extraversion, Agreeableness, Conscientiousness, Neuroticism, and Openness to Experience. We define speaking style as an explicit behavior pattern, and for past experiences and knowledge we provide an initial backstory alongside a specific level of laptop knowledge at the time of interaction. Finally, for task-specific scenario information, we define laptop-related preferences and dealbreakers, and a starting emotion, for each sales scenario.
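The four framework components, as instantiated for SalesSim shoppers, can be sketched as a small data structure. This is a minimal illustration, not the SalesSim schema: the class names (`Level`, `BigFive`, `ShopperPersona`), the field names, the `to_prompt` helper, and all example values are hypothetical. The three trait levels mirror the high/neutral/low scale used in the Big Five rubric.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import List


class Level(Enum):
    """Hypothetical three-point scale, mirroring the high/neutral/low rubric."""
    LOW = "low"
    NEUTRAL = "neutral"
    HIGH = "high"


@dataclass
class BigFive:
    """Character traits: one level per Big Five dimension."""
    extraversion: Level = Level.NEUTRAL
    agreeableness: Level = Level.NEUTRAL
    conscientiousness: Level = Level.NEUTRAL
    neuroticism: Level = Level.NEUTRAL
    openness: Level = Level.NEUTRAL


@dataclass
class ShopperPersona:
    traits: BigFive                 # character traits
    speaking_style: str             # explicit behavior pattern
    backstory: str                  # past experiences
    laptop_knowledge: str           # knowledge at the time of interaction
    preferences: List[str] = field(default_factory=list)   # task-specific
    dealbreakers: List[str] = field(default_factory=list)  # task-specific
    starting_emotion: str = "neutral"                       # task-specific

    def to_prompt(self) -> str:
        """Render the persona as plain text for a simulator's system prompt."""
        trait_lines = [f"- {name}: {level.value}"
                       for name, level in vars(self.traits).items()]
        return "\n".join([
            "Character traits (Big Five):", *trait_lines,
            f"Speaking style: {self.speaking_style}",
            f"Backstory: {self.backstory}",
            f"Laptop knowledge: {self.laptop_knowledge}",
            "Preferences: " + "; ".join(self.preferences),
            "Dealbreakers: " + "; ".join(self.dealbreakers),
            f"Starting emotion: {self.starting_emotion}",
        ])


# Illustrative values only; not taken from the SalesSim persona set.
persona = ShopperPersona(
    traits=BigFive(conscientiousness=Level.HIGH),
    speaking_style="short, direct sentences",
    backstory="Graduate student replacing a five-year-old laptop.",
    laptop_knowledge="novice",
    preferences=["at least 16 GB RAM"],
    dealbreakers=["price above $1,200"],
    starting_emotion="anxious",
)
print(persona.to_prompt())
```

Keeping the task-specific fields separate from the stable traits makes it possible to reuse one character across several sales scenarios by swapping only the preferences, dealbreakers, and starting emotion.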

Evaluating Persona-Following in Human Agents

A good shopper simulator should be able to express preferences, interact with the AI salesperson, and make decisions aligned with its consumer preferences, dealbreakers, and personality traits. To this end, we propose evaluation dimensions and a scoring rubric, categorized by level of importance according to their impact on behavior in sales conversations. Most dimensions are scored with 0 or 1 due to known issues with the calibration of large language models.

Critical Dimensions

- Role-appropriate comprehension of the product class: The AI shopper demonstrates a technical understanding of laptops throughout the conversation that is consistent with the level specified in its persona. Scoring rubric: 0 (Inappropriate) or 1 (Appropriate).

- Adherence to user intent: The human agent sufficiently describes and works towards fulfilling the user intent and success criteria of the task over the course of the conversation, including timely and context-appropriate articulation of preferences and dealbreakers. Scoring rubric: 0 (Issues with adherence) or 1 (Perfect Adherence).

- Adherence to Big Five Traits: The human agent's utterances and actions throughout the dialogue are consistent with the assigned Big Five Personality Traits (e.g., an "Extremely Conscientious" customer exhibits meticulousness and orderly communication). Scoring rubric: high, neutral, and low (refer to corresponding graders for definitions).

- Decision alignment with User's Success Criteria: The human agent makes a final decision (e.g., expressing intent to buy a particular laptop, or leaving the conversation without intent to buy) that is consistent with the preferences and dealbreakers specified in its persona. Scoring rubric: 0 (Misaligned) or 1 (Aligned).

Ancillary Dimensions

- Plausible emotion expression: The emotional valence expressed by the human agent in its responses is plausible given the current context of the conversation and the assigned starting emotion. Scoring rubric: 0 (Implausible) or 1 (Plausible).

- Profile consistency across turns: The human agent maintains a consistent persona across all turns, ensuring that demographic facts and backstory elements do not change arbitrarily. Scoring rubric: 0 (Inconsistent) or 1 (Consistent).

Smoke testing

In addition to the core evaluation dimensions, smoke tests are provided as table-stakes evaluation dimensions. They are useful for debugging issues that cut across dimensions and for identifying potential root causes of AI shopper problems.

- Reasonable utterance length: The human agent conveys a reasonable amount of information per utterance.

- Repetition Fluency: The AI shopper does not repeat information excessively, or displays frustration when it has to do so.

- Social Commonsense Reasoning

- Consideration of more than one recommendation: The human agent demonstrates engagement by considering multiple product options presented by the sales agent, rather than fixating on or prematurely accepting the first suggestion.

- Product Catalog Hallucination: The human agent does not hallucinate facts about the products or store policies mentioned by the shopper.
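Two of these smoke tests, utterance length and repetition, lend themselves to cheap heuristic pre-checks before any model grader is run. The sketch below is an assumption-laden illustration, not the released SalesSim checks: the function names, the word-count bounds, and the n-gram overlap threshold are all hypothetical. The repetition check only surfaces candidate turns. Whether the shopper appropriately displays frustration when repeating itself still requires a grader or human review.

```python
from typing import List, Set, Tuple


def utterance_length_ok(utterance: str, min_words: int = 1,
                        max_words: int = 80) -> bool:
    """Flag utterances that are empty or implausibly long (bounds are assumed)."""
    n_words = len(utterance.split())
    return min_words <= n_words <= max_words


def _ngrams(text: str, n: int) -> Set[Tuple[str, ...]]:
    """Set of lowercase word n-grams in the text."""
    tokens = text.lower().split()
    return {tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)}


def repeated_turns(utterances: List[str], n: int = 3,
                   threshold: float = 0.8) -> List[int]:
    """Return indices of turns whose n-grams mostly appear in one earlier turn."""
    flagged: List[int] = []
    seen: List[Set[Tuple[str, ...]]] = []
    for idx, utterance in enumerate(utterances):
        grams = _ngrams(utterance, n)
        if grams and any(len(grams & prev) / len(grams) >= threshold
                         for prev in seen):
            flagged.append(idx)
        seen.append(grams)
    return flagged


# Toy shopper turns, written for illustration only.
shopper_turns = [
    "I need a laptop under 1000 dollars for school",
    "Does it have a backlit keyboard?",
    "I need a laptop under 1000 dollars for school",
]
print(repeated_turns(shopper_turns))
print([utterance_length_ok(t) for t in shopper_turns])
```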

To support automatic development of customer agents, eight hand-crafted, human-validated model graders for the critical dimensions are released, with plans to release graders for the rest of the dimensions.

| Decision alignment with Persona's Success Criteria (Cohen's Kappa) | Role-appropriate comprehension about the task (Cohen's Kappa) | Adherence to customer intent (Cohen's Kappa) | Adherence to Big Five Traits (Pearson's Correlation) |
|---|---|---|---|
| 0.8 | 0.71 | 0.9 | 0.53 |

Diagram 2: Human-model agreement for critical dimensions
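For readers who want to reproduce this kind of agreement analysis on their own graders, the two statistics can be computed as follows. This is a sketch using the standard definitions of Cohen's kappa and Pearson's correlation, not the authors' analysis code. The function names are hypothetical, the labels are toy data, and the mapping of low/neutral/high to -1/0/1 for the Big Five scores is an assumption.

```python
from collections import Counter
from math import sqrt
from typing import Hashable, Sequence


def cohens_kappa(a: Sequence[Hashable], b: Sequence[Hashable]) -> float:
    """Chance-corrected agreement between two raters over the same items."""
    if len(a) != len(b) or not a:
        raise ValueError("need two non-empty label sequences of equal length")
    n = len(a)
    observed = sum(x == y for x, y in zip(a, b)) / n
    counts_a, counts_b = Counter(a), Counter(b)
    # Agreement expected by chance, from each rater's label frequencies.
    expected = sum(counts_a[k] * counts_b[k]
                   for k in counts_a.keys() | counts_b.keys()) / (n * n)
    if expected == 1.0:
        return float("nan")  # undefined when both raters use one identical label
    return (observed - expected) / (1 - expected)


def pearson_r(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation between two equal-length numeric sequences."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mean_x, mean_y = sum(x) / len(x), sum(y) / len(y)
    cov = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    var_x = sum((xi - mean_x) ** 2 for xi in x)
    var_y = sum((yi - mean_y) ** 2 for yi in y)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for constant input")
    return cov / sqrt(var_x * var_y)


# Toy labels for illustration; these are not the study's annotations.
human_binary = [1, 1, 0, 1, 0, 0, 1, 1, 0, 1]
model_binary = [1, 1, 0, 1, 0, 1, 1, 1, 0, 0]
print(round(cohens_kappa(human_binary, model_binary), 3))

# Assumed ordinal encoding for Big Five grades: low=-1, neutral=0, high=1.
human_trait = [1, 0, -1, 1, 0, -1]
model_trait = [1, 0, 0, 1, -1, -1]
print(round(pearson_r(human_trait, model_trait), 3))
```

Kappa is the natural choice for the 0/1 dimensions because it discounts agreement that two raters would reach by chance, while a correlation suits the ordered three-level Big Five scores.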

Initial Results on Persona-Following in SalesSim

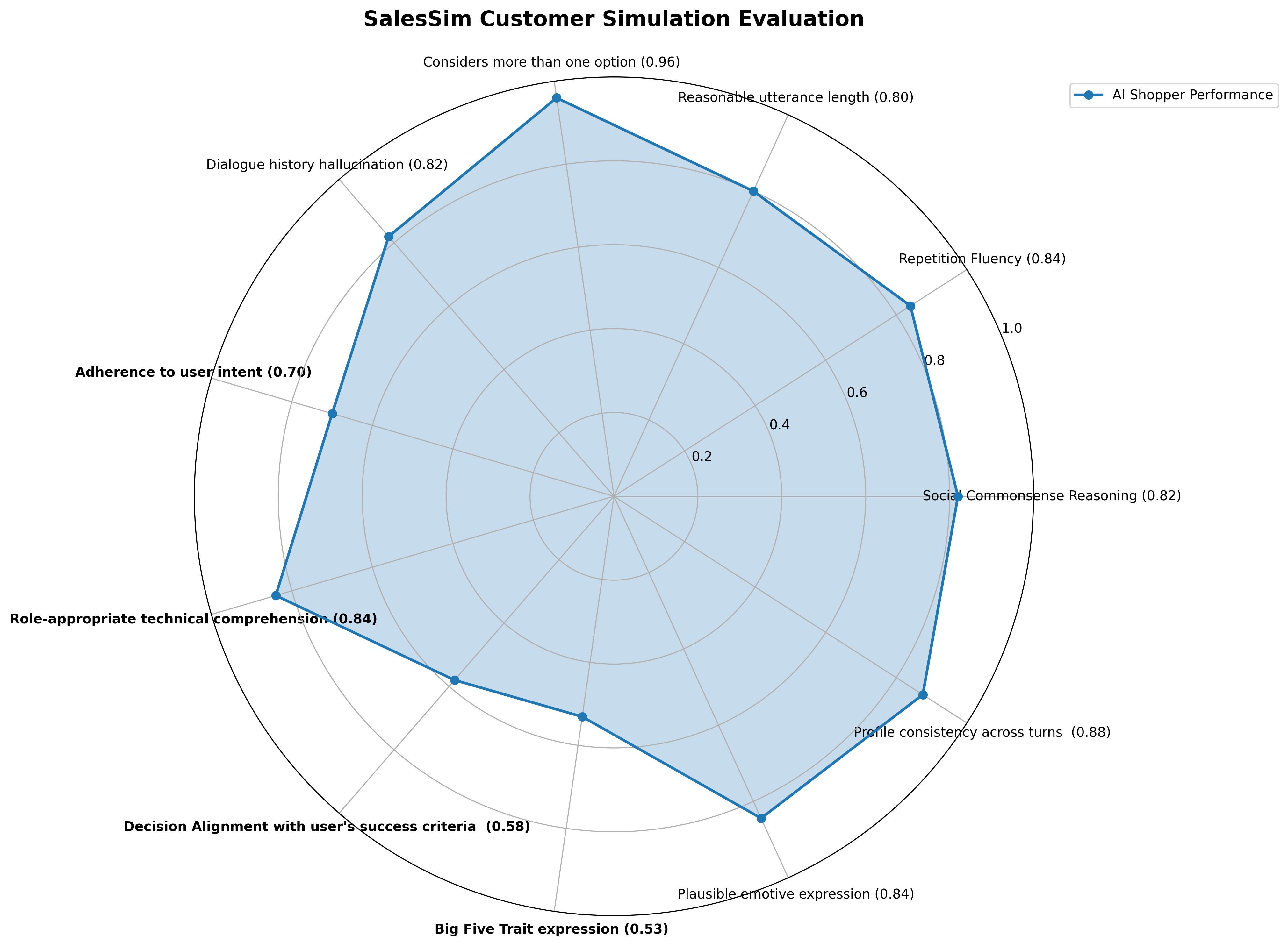

Mistral-3.1-Small was chosen to benchmark due to its reported persona following strengths and superior performance compared to proprietary models in initial experiments. The figure below shows the performance on each dimension over 40 rollouts.

Figure 1: Customer simulation evaluation for SalesSim. Critical dimensions are in bold.

Surprisingly, Mistral-3.1-Small struggles with dimensions that are prerequisites for a valid customer simulator, including the ability to disclose preferences and dealbreakers, make decisions aligned with customer preferences, and embody varied personality types. Across the critical dimensions, 65% of sampled interaction rollouts in SalesSim contain critical errors.
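One plausible way to aggregate per-dimension grades into a rollout-level critical error rate is sketched below. The text does not spell out the exact aggregation, so this is an assumed reading in which a rollout counts as containing a critical error if it fails at least one critical dimension. The dimension keys and function names are hypothetical, and the Big Five grade is assumed to have been binarized beforehand (1 if the graded level matches the assigned level, else 0).

```python
from typing import Dict, List

# Hypothetical keys for the four critical dimensions described above.
CRITICAL_DIMENSIONS = (
    "role_appropriate_comprehension",
    "adherence_to_user_intent",
    "adherence_to_big_five",      # assumed binarized: 1 = matches persona
    "decision_alignment",
)


def has_critical_error(scores: Dict[str, int]) -> bool:
    """True if the rollout scores 0 on at least one critical dimension."""
    return any(scores[dim] == 0 for dim in CRITICAL_DIMENSIONS)


def critical_error_rate(rollouts: List[Dict[str, int]]) -> float:
    """Fraction of rollouts that contain at least one critical error."""
    if not rollouts:
        raise ValueError("need at least one rollout")
    return sum(has_critical_error(r) for r in rollouts) / len(rollouts)


# Toy grades for four rollouts; not the reported SalesSim results.
all_pass = {dim: 1 for dim in CRITICAL_DIMENSIONS}
toy_rollouts = [
    all_pass,
    {**all_pass, "decision_alignment": 0},
    {**all_pass, "adherence_to_user_intent": 0, "adherence_to_big_five": 0},
    all_pass,
]
print(critical_error_rate(toy_rollouts))
```

Under this reading, a rollout that fails several dimensions is still counted once, so the rate is not the sum of the per-dimension failure rates.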

A Takeaway for the Community

Given these results, we encourage the community to:

- Focus on Persona-Following Research: Direct research efforts toward improving the capability of LLMs to faithfully embody complex, multi-dimensional personas, especially in goal-oriented, interactive environments.

- Adopt Rigorous Evaluation: Integrate frameworks like our CAPS-inspired model and critical/ancillary dimensions into standard agent evaluation pipelines to ensure user simulators, and ultimately the agents being evaluated, can work for, with, and alongside humans.

Open-Source Release

We open-source SalesSim at our GitHub page, alongside usersimeval, a lightweight tool to help with evaluation and error analysis of user simulation rollouts. We invite contributions and issues for both SalesSim and usersimeval, especially for adding more product categories to SalesSim and more graders for open-ended user simulators to usersimeval. We are excited about centering user simulators in research, and about the downstream use of user simulators in improving ML systems.

Ethics

The development and use of user simulators must be guided by a clear ethical statement prioritizing user well-being, fairness, and transparency. This necessitates careful design to prevent the perpetuation of bias and to ensure that models accurately reflect diverse user populations.

Acknowledgements

We thank Pranav Narayanan Venkit and Yu Li for their feedback on this project.

Citation

@misc{personafollowing2025,
  title={Models are Worse at Persona-Following than You Think},
  author={Pruksachatkun, Yada and Wu, Chien-Sheng and Xiong, Caiming},
  year={2025}
}